Contributions

- We propose a cross-embodiment system—supporting wheeled robots, quadrupeds, and humanoids—that incrementally builds a multi-layer representation of the environment, including room, viewpoint, and object layers, enabling the VLM to make more informed decisions during the object navigation.

- We design an efficient two-stage navigation policy based on this representation, combining high-level planning guided by the VLM's reasoning and low-level exploration with VLM's assistance.

- Our system not only supports standard object navigation but also enables conditional object navigation—such as navigation conditioned on object attributes or spatial relations—through its multi-layer representation.

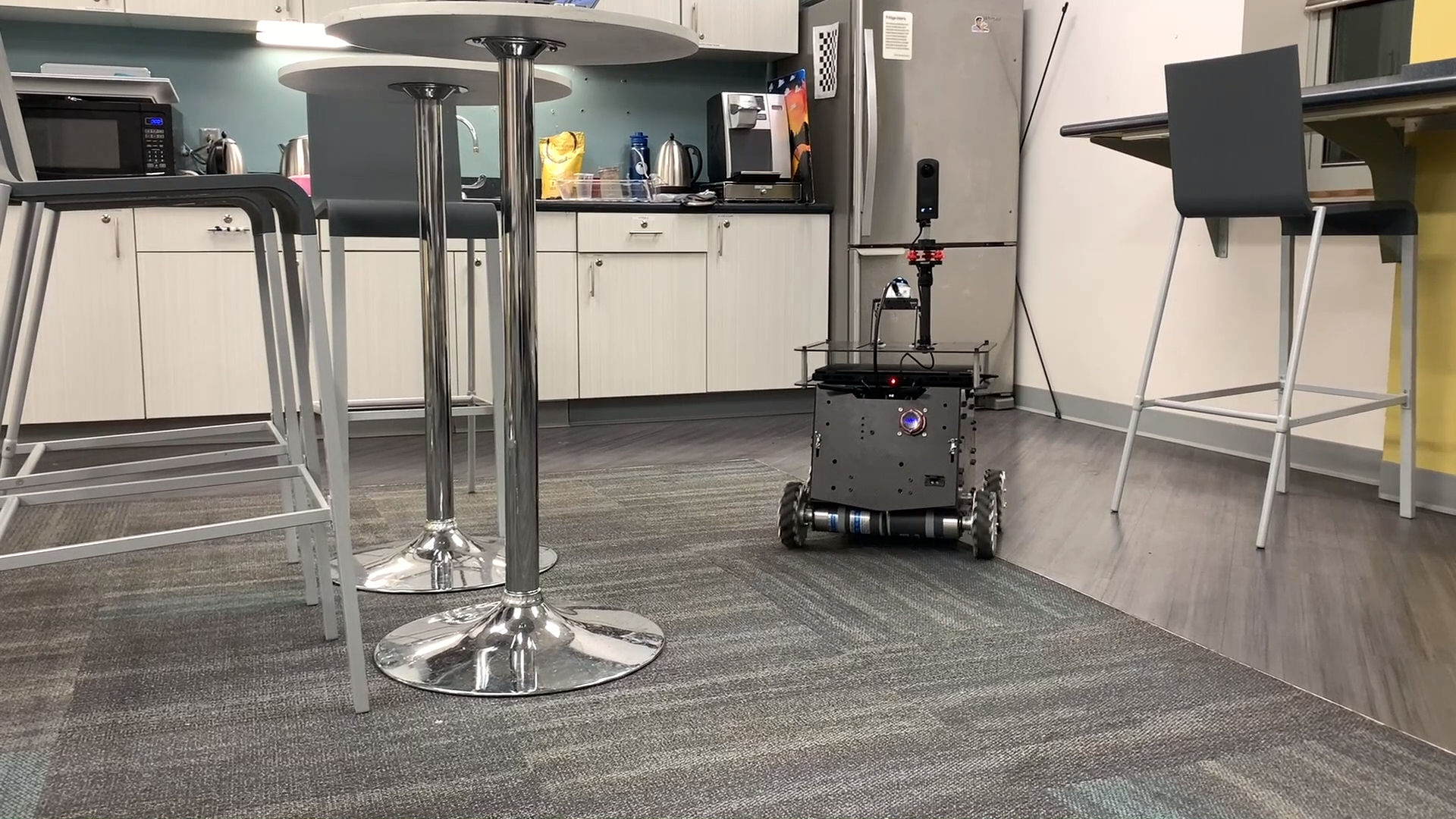

- We conduct extensive real-world evaluations, including three long-range tests spanning an entire building floor and 75 unit tests conducted within multi-room environments (51 on wheeled robots, 18 on quadrupeds and 6 on humannoids).

Wheeled Robot:

Wheeled Robot:

Quadruped:

Quadruped:  Humanoid:

Humanoid: